New Machine-Learning Tactic Sharpens NIF Shot Predictions

July 8, 2021

Design physicist Kelli Humbird presents a talk on inertial confinement fusion (ICF) at the Women in Data Science regional event at LLNL in March 2020. Credit: Holly Auten

Inertial confinement fusion (ICF) experiments on NIF are extremely complex and costly, and it is challenging to accurately and consistently predict the outcome. But that is now changing, thanks to the work of LLNL design physicists.

In a paper recently published in Physics of Plasmas, design physicist Kelli Humbird and her colleagues describe a new machine-learning-based approach for modeling ICF experiments that results in more accurate predictions of NIF shots. The paper reports that machine learning models that combine simulation and experimental data are more accurate than the simulations alone, reducing prediction errors from as high as 110 percent to less than 7 percent.

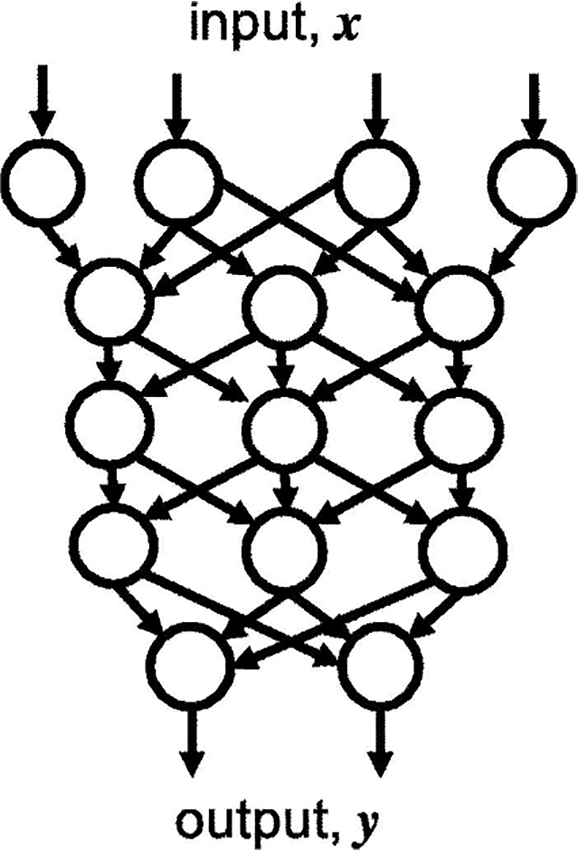

This illustration of a neural network shows how inputs are mapped to outputs via a series of nonlinear transformations through the layers of the network. The model is trained by learning from a supervised dataset of the inputs and corresponding outputs.

“This paper on cognitive simulation models leverages a technique called ‘transfer learning,’ ” said Humbird, lead author of the paper, “that lets us combine our simulation knowledge and previous experimental data into a model that is more predictive of future inertial confinement fusion experiments at NIF than simulations alone.”

Humbird’s paper, “Cognitive simulation models for inertial confinement fusion: Combining simulation and experimental data,” was the subject of an invited talk at the 62nd Annual Meeting of the APS Division of Plasma Physics, held virtually on Nov. 9-13, 2020.

ICF experiments produce a high neutron yield by compressing a small spherical capsule filled with deuterium and tritium (DT), leading to conditions favorable for fusion reactions. Because of the complex multiphysics nature of the problem, modeling NIF ICF experiments is especially demanding. Even with modern supercomputers like LLNL’s Sierra, computer simulations of ICF experiments aren’t perfect.

“In short,” Humbird said, “our models of ICF experiments are very good, but not perfectly predictive across the broad range of design space our NIF experiments span. To accurately predict the outcome of future NIF experiments, we need to adjust our model based on what we’ve observed in previous experiments.

“My talk explored transfer learning as a way to ‘correct’ simulation predictions by essentially learning the difference between what our simulations predict and what we actually observe.”

A neural network is first trained on a variety of simulations to teach it the basics of ICF and how different measures, such as yield and temperature, are related. This network by itself can efficiently mimic the results of the computer code. Then, a portion of the neural network is retrained on NIF experimental data, allowing it to adjust its predictions of performance.
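The two-stage procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' model: the `simulator` and `experiment` functions, the network size, and the learning rates are all hypothetical, and the "experimental" data are synthetic (a simulation with an invented systematic bias). The network is first trained on plentiful simulation samples; then its hidden layer is frozen and only the output layer is retrained on 20 synthetic "experiments."

```python
import numpy as np

rng = np.random.default_rng(0)


def simulator(x):
    """Hypothetical stand-in for a cheap simulation of two design inputs."""
    return (np.sin(2 * x[:, 0]) + x[:, 1] ** 2)[:, None]


def experiment(x):
    """Hypothetical 'reality': the simulation plus a systematic bias."""
    return 0.7 * simulator(x) + 0.3


class TinyNet:
    """One hidden ReLU layer: h = relu(x @ W1 + b1), y = h @ W2 + b2."""

    def __init__(self, n_in, n_hidden, n_out):
        self.W1 = rng.normal(0, 1 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0, 1 / np.sqrt(n_hidden), (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, x):
        h = np.maximum(x @ self.W1 + self.b1, 0.0)
        return h, h @ self.W2 + self.b2

    def mse(self, x, y):
        return float(np.mean((self.forward(x)[1] - y) ** 2))

    def fit(self, x, y, epochs, lr, freeze_hidden=False):
        """Full-batch gradient descent on mean squared error."""
        for _ in range(epochs):
            h, pred = self.forward(x)
            g_out = 2 * (pred - y) / len(x)          # dMSE/dpred
            if not freeze_hidden:                     # backprop into layer 1
                g_h = (g_out @ self.W2.T) * (h > 0)
                self.W1 -= lr * (x.T @ g_h)
                self.b1 -= lr * g_h.sum(axis=0)
            self.W2 -= lr * (h.T @ g_out)             # output layer always trains
            self.b2 -= lr * g_out.sum(axis=0)


# Stage 1: learn the "physics" from many cheap simulations.
x_sim = rng.uniform(-1, 1, (2000, 2))
net = TinyNet(2, 32, 1)
net.fit(x_sim, simulator(x_sim), epochs=3000, lr=0.02)

# Stage 2: freeze the hidden layer, retrain the output layer on few "shots".
x_exp = rng.uniform(-1, 1, (20, 2))
x_test = rng.uniform(-1, 1, (200, 2))
err_before = net.mse(x_test, experiment(x_test))
net.fit(x_exp, experiment(x_exp), epochs=3000, lr=0.02, freeze_hidden=True)
err_after = net.mse(x_test, experiment(x_test))
print(f"test MSE vs. experiment: before {err_before:.4f}, after {err_after:.4f}")
```

Freezing the hidden layer preserves what the network learned from simulations, while the small output layer absorbs the systematic offset from only a handful of samples. Which layers to freeze is a design choice; the article says only that "a portion" of the network is retrained.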

“The result is a model that predicts the outcome of some of our most recent experiments with significantly higher accuracy than the simulations alone,” Humbird said.

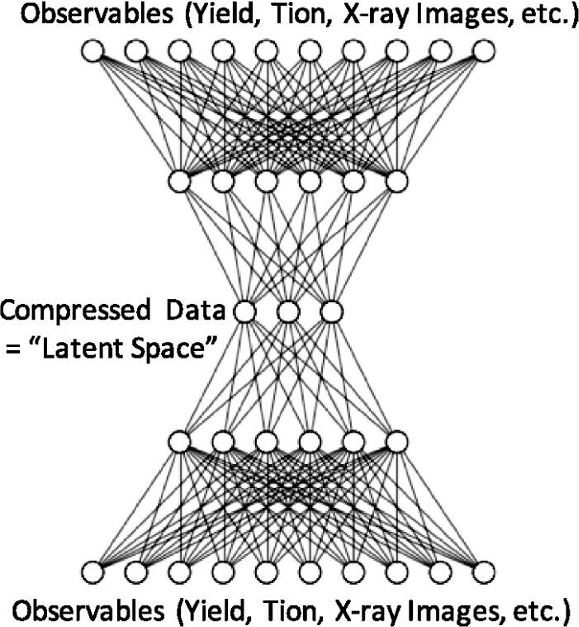

This illustration of an autoencoder neural network shows how autoencoders take as input to the model a large set of correlated data, and non-linearly compress this information into a lower dimensional latent space via the encoder portion of the model. The decoder then expands the latent space vector back to the original set of data; the model is trained by minimizing the reconstruction error.
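The compress-then-reconstruct idea in this caption can be sketched with a tiny NumPy autoencoder. This is an illustrative assumption, not the architecture from the paper: a single tanh layer encodes six synthetic, correlated "observables" into a two-dimensional latent space, a linear layer decodes them, and both are trained by gradient descent on the mean squared reconstruction error. The function name `train_autoencoder`, the data, and all sizes and rates are hypothetical.

```python
import numpy as np


def train_autoencoder(x, n_latent, epochs=3000, lr=0.2, seed=0):
    """Tiny autoencoder: tanh encoder to a latent space, linear decoder back.

    Trained by full-batch gradient descent on mean squared reconstruction
    error. Returns (encoder params, decoder params, error before, error after).
    """
    rng = np.random.default_rng(seed)
    n_in = x.shape[1]
    We, be = rng.normal(0, 0.5, (n_in, n_latent)), np.zeros(n_latent)
    Wd, bd = rng.normal(0, 0.5, (n_latent, n_in)), np.zeros(n_in)

    def reconstruct():
        z = np.tanh(x @ We + be)       # encoder: nonlinear compression
        return z, z @ Wd + bd          # decoder: expand back to data space

    err_before = float(np.mean((reconstruct()[1] - x) ** 2))
    for _ in range(epochs):
        z, recon = reconstruct()
        g_rec = 2 * (recon - x) / x.size          # dMSE/d(recon)
        g_z = (g_rec @ Wd.T) * (1 - z ** 2)       # back through tanh
        Wd -= lr * (z.T @ g_rec)
        bd -= lr * g_rec.sum(axis=0)
        We -= lr * (x.T @ g_z)
        be -= lr * g_z.sum(axis=0)
    err_after = float(np.mean((reconstruct()[1] - x) ** 2))
    return (We, be), (Wd, bd), err_before, err_after


# Synthetic data: 6 correlated outputs driven by 2 hidden factors.
rng = np.random.default_rng(1)
factors = rng.normal(size=(1000, 2))
observables = np.tanh(factors @ rng.normal(size=(2, 6)))
_, _, before, after = train_autoencoder(observables, n_latent=2)
print(f"reconstruction MSE: {before:.4f} -> {after:.4f}")
```

Because the six outputs are generated from only two factors, a two-dimensional latent space can in principle capture most of their variation, which is the property that makes the compressed representation useful.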

According to the paper, after retraining on 20 experiments, transfer learning reduced the mean relative prediction error on the test data as follows:

- Bang time (the time of peak neutron emission, which characterizes the speed of the ICF implosion): from 9 percent to 4 percent
- Burn width (the length of time the implosion is producing neutrons): from 50 percent to 14 percent
- Log DT neutron yield: from 73 percent to 3 percent
- DT ion temperature: from 200 percent to 7 percent
- Log deuterium-deuterium (DD) neutron yield: from 87 percent to 7 percent
- DD ion temperature: from 300 percent to 7 percent
- Downscatter ratio (the ratio of neutrons scattered to lower energies by interactions with the hydrogen isotopes in the fuel to unscattered high-energy neutrons, an indication of fuel density): from 14 percent to 8 percent
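The article does not spell out the error metric. A common definition of mean relative error, assumed here, is the average of |predicted - observed| / |observed| over the test shots. Under that definition the error exceeds 100 percent whenever predictions are more than twice the measured value, which is one way entries such as 200 or 300 percent can arise. The temperatures below are invented for illustration and are not NIF data.

```python
def mean_relative_error(predicted, observed):
    """Average of |predicted - observed| / |observed|, returned as a fraction."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("need equal-length, non-empty sequences")
    total = sum(abs(p - o) / abs(o) for p, o in zip(predicted, observed))
    return total / len(observed)


# Hypothetical ion temperatures in keV for three shots (not NIF data).
observed = [3.0, 4.0, 5.0]
simulation_only = [9.0, 12.0, 15.0]   # every prediction is 3x too high
transfer_learned = [3.2, 3.8, 5.1]

print(f"{mean_relative_error(simulation_only, observed):.1%}")   # 200.0%
print(f"{mean_relative_error(transfer_learned, observed):.1%}")  # 4.6%
```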

“One of the big benefits of this model is that it can be updated every time we carry out an experiment,” Humbird said, “getting smarter and more accurate as we acquire more data and explore more of the NIF design space.”

She said the Lab has been using this transfer learning model since November to predict NIF experiments, “and we’ve observed the prediction error generally decreasing as we continue to acquire more data.”

Hydra Python Deck (HyPyD) is an interface to the radiation hydrodynamics code Hydra that is intended to help researchers configure and run a wide variety of simulations of a broad array of NIF experiments.

“HyPyD really enabled this work,” Humbird said. “It required running a lot of complex integrated hohlraum simulations quickly without human intervention. HyPyD made the process very simple and straightforward.”

Lab design physicist Jay Salmonson, one of the primary developers of HyPyD, said, “Kelli’s suites of simulations for training data are precisely the type of use for which HyPyD is intended. Previously, it would have taken prohibitively long to configure and set up such an array of simulations and would have required an infeasible number of hours to manually fix and restart finicky simulations that might have crashed during their runs.”
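HyPyD's own interface is not described in this article, so the following is only a generic sketch of the idea Salmonson describes: running a suite of cases unattended and automatically restarting any that crash. `SimulationCrash`, `CaseFailed`, `run_with_restarts`, `run_suite`, `flaky_runner`, and the `drive` parameter are all hypothetical, and the fake runner stands in for a real Hydra job.

```python
class SimulationCrash(Exception):
    """Hypothetical error a runner raises when a simulation dies mid-run."""


class CaseFailed(Exception):
    """Raised when a case still crashes after every allowed attempt."""


def run_with_restarts(run_case, params, max_attempts=3):
    """Run one case, restarting automatically after each crash."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            # Passing the attempt number lets a runner change settings on restart.
            return run_case(params, attempt)
        except SimulationCrash as err:
            last_error = err
    raise CaseFailed(f"{params} failed after {max_attempts} attempts") from last_error


def run_suite(run_case, cases, max_attempts=3):
    """Run every case unattended; collect results and the names that failed."""
    results, failed = {}, []
    for name, params in cases.items():
        try:
            results[name] = run_with_restarts(run_case, params, max_attempts)
        except CaseFailed:
            failed.append(name)
    return results, failed


def flaky_runner(params, attempt):
    """Fake stand-in for a simulation job (hypothetical behavior)."""
    if params["drive"] < 0:
        raise SimulationCrash("invalid input")        # crashes every time
    if params["drive"] > 1.0 and attempt == 1:
        raise SimulationCrash("timestep collapse")    # crashes once, then runs
    return {"yield": params["drive"] ** 2}


cases = {"a": {"drive": 0.5}, "b": {"drive": 1.5}, "c": {"drive": 2.0}, "d": {"drive": -1.0}}
results, failed = run_suite(flaky_runner, cases)
print(results)  # {'a': {'yield': 0.25}, 'b': {'yield': 2.25}, 'c': {'yield': 4.0}}
print(failed)   # ['d']
```

Cases that recover on a restart are kept, while cases that fail every attempt are set aside for a human to inspect instead of stalling the whole suite.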

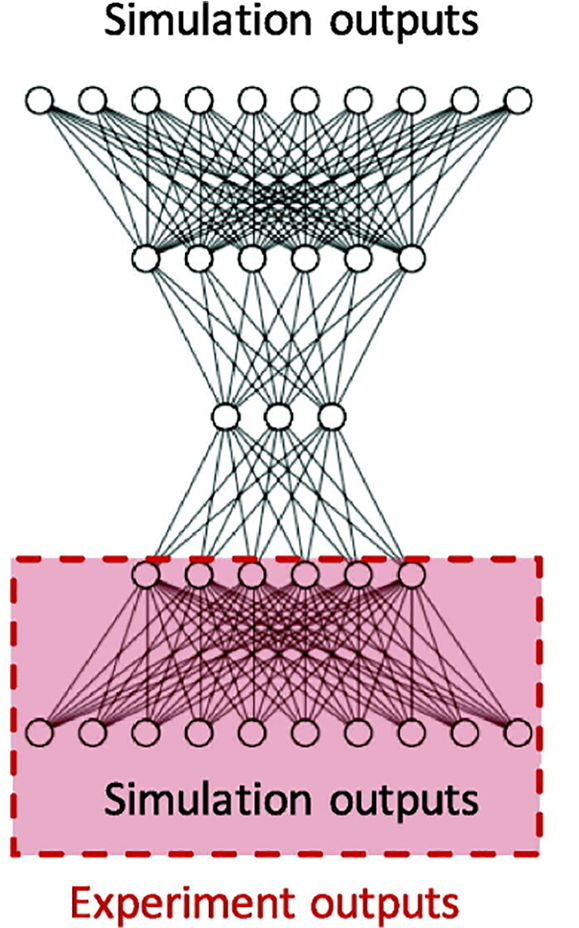

This illustration shows how an autoencoder is transfer-learned so that it decodes to experimental outputs rather than simulation outputs.

Humbird explained that the high error rates of the simulations alone stem from how the HyPyD simulations used here differ from the simulations used to design new ICF experiments.

The HyPyD simulations this model uses are 1D simulations with errors as high as 110 percent, because they assume the experiments are nearly perfect (no asymmetries, no engineering features, and no imperfections in capsule quality) and they haven’t been adjusted to match any recent experimental data.

In designing new ICF experiments, ICF researchers typically run high-fidelity 2D simulations that are tuned to match prior experiments in a single campaign. The proposed transfer learning technique instead leverages computationally inexpensive low-fidelity simulations and experimental data that span more than half a dozen campaigns. This results in a model that reduces the 1D simulation error from over 100 percent to less than 10 percent for many quantities of interest, and is accurate over the full design space spanned by NIF ICF experimental data.

“These are the models that are ‘very good, but not perfect’ at representing experimental reality,” Humbird said.

Design physicist Luc Peterson said Humbird’s paper “is an inflection in how we can do predictive science at the NIF.

“If we model an experiment and our simulations don’t agree with what we measure, it’s very hard to coax a simulation to give a different answer,” Peterson said. “However, in this paper, we show that we can break from this constraint.

“Unbounded by computer code, with each new thing it sees, the AI model gets smarter. In this sense, even experiments that don’t perform as expected are good experiments, since we can quantitatively learn from each new experience.

“Our simulations are the batting cages that teach our model how to hit,” Peterson said. “But our experiments are the big leagues, where we learn to play for real. Sometimes we strike out, but after each game we learn to hit a little better. And who knows? Maybe someday we’ll knock one out of the park.”

Joining Humbird, Salmonson, and Peterson on the Physics of Plasmas paper was design physicist Brian Spears.

—Jon Kawamoto
