Machine Learning Points to New Target Designs

January 9, 2019

Excerpted from an article by Ben Kennedy in the September 2018 issue of Science and Technology Review.

When the Trinity supercomputer at Los Alamos National Laboratory was first coming online, calls went out for research projects that would test—and potentially break—the new system. Researchers from Lawrence Livermore answered the call, and their work with Trinity and machine learning could disrupt 40 years of assumptions about inertial confinement fusion (ICF).

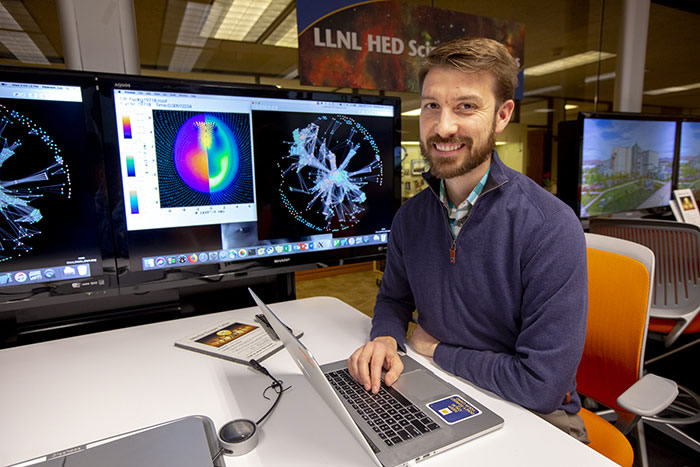

Luc Peterson (shown here) and Steve Langer used the Trinity supercomputer and machine learning to simulate implosions across a wide range of target parameters. Credit: Jason Laurea

“The theory of ICF was all done with pencil and paper, assuming a spherical implosion,” says design physicist Luc Peterson. “In many studies, if your implosion isn’t spherical, you’re not getting as much energy out of it as you could.”

ICF implosions at the National Ignition Facility (NIF) compress a spherical target housed in a cylindrical hohlraum, whose geometry creates a preferred axis and makes the implosion asymmetric. To counter this tendency, Peterson was given what he calls an impossible job: either make the implosions rounder or design an implosion robust enough to tolerate the inherent asymmetries and still achieve a high energy yield.

“I listed all the ways that NIF could possibly implode something asymmetrically,” Peterson says. “I got a very large number of parameters and realized that to check all the different combinations, I would need to run many simulations—more than had ever been done before.”

The nine parameters included various asymmetries, drive multipliers, and gas fill densities, all factors that affect the quality of a target implosion. Simulating every combination would produce five petabytes of raw data, close to the current limit of Livermore’s parallel file systems.
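Why the number of simulations grows so quickly can be seen with a little combinatorics. The sketch below assumes a simple full-factorial grid in which each of the nine parameters is sampled at the same number of levels; the article does not say how many values were actually used per parameter or how the sweep was designed, so the level counts and the names `full_grid_size` and `enumerate_grid` are purely illustrative.

```python
from itertools import product
from typing import Iterator, Sequence, Tuple

NUM_PARAMETERS = 9  # from the article: nine implosion parameters


def full_grid_size(levels_per_parameter: int, num_parameters: int = NUM_PARAMETERS) -> int:
    """Runs needed for a full-factorial sweep with the same number of levels per parameter.

    Hypothetical illustration: the real study's sampling scheme is not given in the article.
    """
    return levels_per_parameter ** num_parameters


def enumerate_grid(levels: Sequence[Sequence[float]]) -> Iterator[Tuple[float, ...]]:
    """Yield every combination of parameter values, one tuple per simulation."""
    return product(*levels)


if __name__ == "__main__":
    # Even a coarse grid explodes: 2, 3, 5, or 10 values per parameter.
    for k in (2, 3, 5, 10):
        print(f"{k} levels per parameter -> {full_grid_size(k):,} simulations")

    # Hypothetical values (e.g. a drive multiplier of 0.9, 1.0, 1.1) reused for all nine parameters.
    coarse = [[0.9, 1.0, 1.1]] * NUM_PARAMETERS
    print(sum(1 for _ in enumerate_grid(coarse)))
```

With only three values per parameter the grid already holds 3^9 = 19,683 runs, and ten values per parameter would require a billion, which is why exhaustively checking every combination quickly becomes a storage and compute problem.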

Steve Langer, Peterson’s colleague and a fellow Laboratory design physicist, heard about the effort to map out all nine parameters and conceived of a way to help.

Kicking the Tires

Langer’s idea involved Trinity, then a brand-new Cray XC40 system. Typically, before transitioning a new computer to classified work, a national laboratory holds an open-science period in which researchers can “kick the tires” of the new system by running unclassified experiments. Laboratory technicians can also consult with the computer’s vendors as the experiments run and discover ways to fine-tune the system.

Langer’s plan was to process their raw physics data on the fly, analyzing and deleting files while they were being created, instead of saving all the data. Peterson and Langer pitched their big-data physics simulation proposal to Los Alamos, and a collaboration was born.

“We knew we would have to do some distillation to even store the results on disk, which prompted us to create this on-the-fly, in-transit system,” says Peterson. “We developed a system to perform the filtering while the simulations are running. The approach is like filling up a bucket with water while making a hole in the side to drain the bucket so it doesn’t overflow.”
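The “bucket with a hole” idea maps onto a familiar producer/consumer pattern: simulations write raw output into a bounded buffer, and a filter drains that buffer by reducing each chunk to a few summary numbers and discarding the rest. The sketch below is a minimal illustration under stated assumptions, not the Livermore/Los Alamos system, whose design the article does not describe. It uses in-memory chunks and a `queue.Queue` instead of files on a parallel file system, a single filtering thread, and hypothetical names (`run_simulation`, `distill`, `BUFFER_CAPACITY`) and summaries (mean and maximum).

```python
import queue
import random
import statistics
import threading

BUFFER_CAPACITY = 4  # hypothetical: max raw chunks held at once (the "bucket")


def run_simulation(sim_id, n_chunks, out_q):
    """Hypothetical stand-in for a simulation that emits raw output chunks as it runs."""
    rng = random.Random(sim_id)
    for step in range(n_chunks):
        raw = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # pretend raw field data
        out_q.put((sim_id, step, raw))  # blocks while the buffer is full, so it never overflows
    out_q.put(None)  # sentinel: this simulation has finished


def distill(in_q, n_producers, summaries):
    """Drain the buffer (the "hole in the bucket"), keeping only small summaries."""
    finished = 0
    while finished < n_producers:
        item = in_q.get()
        if item is None:
            finished += 1
            continue
        sim_id, step, raw = item
        # Only these scalars are kept; the raw values are never written anywhere.
        summaries.append((sim_id, step, statistics.fmean(raw), max(raw)))


def main(n_sims=3, n_chunks=5):
    buf = queue.Queue(maxsize=BUFFER_CAPACITY)
    summaries = []
    consumer = threading.Thread(target=distill, args=(buf, n_sims, summaries))
    consumer.start()
    producers = [
        threading.Thread(target=run_simulation, args=(i, n_chunks, buf))
        for i in range(n_sims)
    ]
    for p in producers:
        p.start()
    for p in producers:
        p.join()
    consumer.join()
    return summaries


if __name__ == "__main__":
    result = main()
    print(f"kept {len(result)} summaries instead of {len(result) * 1000} raw values")
```

The bounded queue is the key design choice here: producers pause rather than exhaust storage when the filter falls behind, so peak storage is capped at `BUFFER_CAPACITY` chunks no matter how many simulations run.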

