Efficiency Improvements - 2017

October

Automation Speeds and Smooths NIF’s Optics Recycle Loop

As the energy of NIF’s lasers, up to nearly two megajoules of ultraviolet light, strikes NIF’s highly damage-resistant fused silica optics, the few tiny flaws, defects, and contaminants that remain can absorb the laser light and initiate damage that will eventually degrade the optic’s performance.

In the past, it took highly trained technicians an average of 6,000 mouse clicks or keystrokes and 6.3 work-hours to repair those optics.

But now, thanks to an ambitious automation initiative begun a year and a half ago by a team led by Mike Nostrand, process engineering lead for Optics and Materials Science & Technology (OMST), LLNL’s Optics Mitigation Facility (OMF) operators can accomplish those repairs in a fraction of the effort—using a little more than 300 computer interactions and in only one work-hour.

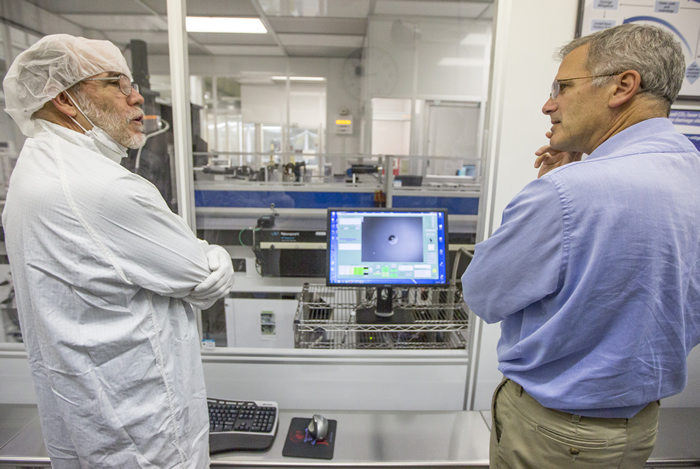

Electrical engineer and computer software architect Glenn Larkin (left) demonstrates the new automated optics damage mitigation system for NIF & Photon Science Principal Associate Director Jeff Wisoff. Credit: Jason Laurea

The optics repair system has performed more than 300,000 individual mitigations (repairs) since 2009, Nostrand said. The average repaired site is just over one millimeter in diameter, so over an eight-year period all repairs combined cover only about 60 centimeters by 60 centimeters, approximately the area of a single optic.
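The cumulative-area figure can be checked with a quick back-of-the-envelope calculation. The sketch below is illustrative only: the 1.1-millimeter diameter is an assumed stand-in for “just over one millimeter,” and the article does not say whether each site was counted as a circle or as its bounding square, so both are computed.

```python
import math

NUM_REPAIRS = 300_000        # from the article: "more than 300,000"
SITE_DIAMETER_MM = 1.1       # assumption: "just over one millimeter"

# Area of one repair site, modeled two ways (both are assumptions).
circle_area_mm2 = math.pi * (SITE_DIAMETER_MM / 2) ** 2   # circular footprint
square_area_mm2 = SITE_DIAMETER_MM ** 2                   # bounding square


def equivalent_square_side_cm(total_area_mm2: float) -> float:
    """Side length, in centimeters, of a square with the given area."""
    return math.sqrt(total_area_mm2) / 10.0


circle_side_cm = equivalent_square_side_cm(NUM_REPAIRS * circle_area_mm2)
square_side_cm = equivalent_square_side_cm(NUM_REPAIRS * square_area_mm2)

print(f"circular sites: {circle_side_cm:.1f} cm on a side")
print(f"square sites:   {square_side_cm:.1f} cm on a side")
```

Under these assumptions the combined area works out to a square roughly 53 to 60 centimeters on a side, consistent with the quoted figure.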

The process to repair NIF optics is called the NIF Optics Recycle Loop. The optics are installed on the NIF laser, the operations team fires a shot, and the high-energy light can initiate damage, which can grow on subsequent shots. When damage growth reaches unacceptable levels, the optic is removed, cleaned and coated in the Optics Processing Facility, repaired in the OMF, and returned to service.

Surface damage sites are initially tiny, in the range of 100 microns or about the diameter of a human hair. If these “precursor” sites stayed small they wouldn’t be a problem; but under continued illumination by the laser, they can grow. After several shots, the area of a site can become large enough to disrupt the performance of the laser beam on the target and limit the optic’s lifespan.

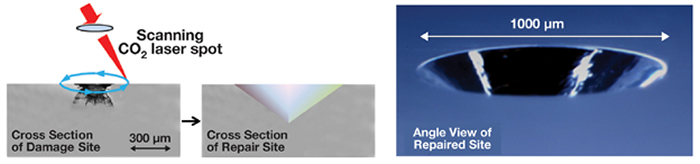

Once a damaged optic is removed, the OMST technicians locate the small damage sites using microscopes and high-resolution cameras. They use a CO2 laser to drill out conical sections around the damage sites. Currently about 20 optics a week are repaired through the NIF Optics Recycle Loop.

Schematic showing the NIF laser damage repair process, which uses a scanned, pulsed, and focused CO2 laser spot to evaporate a conical pit: (Left) cross-section view and (Right) angle view from top.

Even though the original process was semi-automatic, repairing each optic still took multiple work-hours and required considerable human decision-making and computer interaction.

Fully automating the uniquely complex and precise mitigation equipment has taken more than a year, Nostrand said. The process required a multifaceted set of disciplines, including harnessing the “deep learning” algorithms of machine learning and tapping the Laboratory’s computational engineering expertise to train the computers to be able to replace human judgment for needed repairs.

“This is an important milestone,” said Tayyab Suratwala, program director for OMST. “This is the first time we’re using deep learning in a production mode, which will save significant effort time, allowing the team to be more effective.”

The result of the newly automated process for production is what OMF calls a “hands-off” approach, although it still requires humans to flip switches and provide oversight, Nostrand said. Automation frees up the workforce to leave the OMF Control Room and perform other tasks. No jobs were lost due to the automation, he said; instead, technicians gained cross-training and expanded their capabilities by learning new roles in the optics facility.

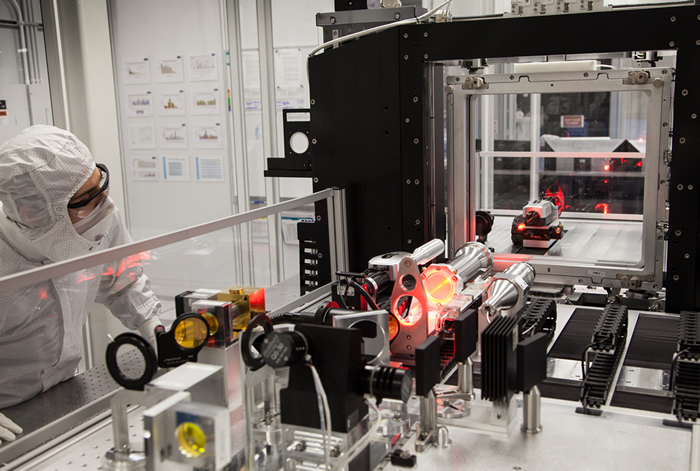

Optics Mitigation Facility operator Constantine Karkazis inspects the facility’s CO2 laser processing hardware. The NIF fused-silica optic is mounted within a large translation stage (the large black vertical structure). Inspection microscopes and laser delivery optics are in the foreground.

Electrical engineers Glenn Larkin and Scott Trummer wrote the control system software to automatically maneuver the microscope and camera to the desired locations, acquire images, and based on the analysis of those images, apply the proper repair protocol to the site. Said Larkin, “What Scott and I have done is take an operator’s knowledge of what to do for a given image and embed that in a control system that can make decisions and take appropriate action when it is possible to do so. Now, instead of executing 6,000 mouse-clicks in a session, which is kind of an ergonomic challenge, it’s down to about 300.”
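The control flow Larkin describes (move to a site, acquire an image, analyze it, then either act or defer to a person) can be sketched in a few lines. This is a minimal illustration, not the OMF software: every name here (`Site`, `Assessment`, `choose_protocol`, `run_session`), the protocol labels, and the 500-micron threshold are hypothetical, and the hardware calls are replaced by stubs.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Site:
    x_mm: float
    y_mm: float


@dataclass(frozen=True)
class Assessment:
    kind: str            # hypothetical labels: "damage" or "none"
    diameter_um: float


def choose_protocol(a: Assessment) -> Optional[str]:
    """Hypothetical rules; the labels and threshold are invented."""
    if a.kind == "none":
        return "skip"
    if a.kind == "damage" and a.diameter_um <= 500.0:
        return "standard_cone"
    return None  # no rule applies: leave the decision to an operator


def run_session(
    sites: List[Site],
    acquire_image: Callable[[Site], object],
    assess: Callable[[object], Assessment],
    apply_protocol: Callable[[Site, str], None],
) -> List[Tuple[Site, str]]:
    """Visit each site, decide what to do, and log the action taken."""
    log = []
    for site in sites:
        image = acquire_image(site)      # move stage and microscope, take image
        protocol = choose_protocol(assess(image))
        if protocol is None:
            log.append((site, "needs_operator"))
        elif protocol == "skip":
            log.append((site, "skip"))
        else:
            apply_protocol(site, protocol)
            log.append((site, protocol))
    return log


# Stub data standing in for real images and hardware (all values invented).
fake_assessments = {
    Site(10.0, 20.0): Assessment("damage", 120.0),
    Site(55.0, 5.0): Assessment("none", 0.0),
    Site(80.0, 40.0): Assessment("damage", 900.0),
}
applied = []
log = run_session(
    sites=list(fake_assessments),
    acquire_image=lambda site: site,                  # the "image" is the site key
    assess=lambda image: fake_assessments[image],
    apply_protocol=lambda site, protocol: applied.append((site, protocol)),
)
for site, action in log:
    print(site, action)
```

Returning `None` when no rule matches mirrors the “when it is possible to do so” caveat: the sketch acts only on cases its rules cover and flags the rest for human review.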

Laura Kegelmeyer, an engineer who is team lead for NIF Optics Inspection Analysis, worked on the machine-learning software for the pre-mitigation inspection. In essence, Kegelmeyer trained the computer to understand what kind and size of damage is present in an image and where it’s located. She used image analysis techniques to extract the information from thousands of previous inspections, then used those data to grow ensembles of decision trees capable of making a human-level assessment. This type of machine learning uses years’ worth of past statistics to infer how to assess the damage in a new image, she noted.

“Decision trees require feature extraction via image analysis,” she said. “I have to put a lot of work into deciding what information to feed the decision trees and how to extract it. The machine learning results provide data to the control system so it can apply the correct repair protocol.”
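To make the idea of an ensemble of decision trees concrete, the sketch below trains many one-split trees (“stumps”) on bootstrap resamples of hand-extracted features and lets them vote. It is a simplified illustration under stated assumptions: the article does not say which ensemble method or features were used, and the feature names, toy data, and labels here are invented.

```python
import random
from collections import Counter


def fit_stump(rows, labels):
    """Pick the single (feature, threshold) split with the fewest errors."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no usable split: always predict the majority label
        majority = Counter(labels).most_common(1)[0][0]
        return (0, float("inf"), majority, majority)
    return best[1:]


def predict_stump(stump, row):
    f, t, l_lab, r_lab = stump
    return l_lab if row[f] <= t else r_lab


def fit_ensemble(rows, labels, n_trees=25, seed=0):
    """Train each stump on a bootstrap resample of the labeled data."""
    rng = random.Random(seed)
    n = len(rows)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx]))
    return stumps


def predict(stumps, row):
    """Majority vote across all stumps."""
    votes = Counter(predict_stump(s, row) for s in stumps)
    return votes.most_common(1)[0][0]


# Invented toy features per site: (diameter in microns, mean brightness).
rows = [(40, 0.20), (60, 0.30), (80, 0.25), (90, 0.35),
        (150, 0.70), (180, 0.75), (220, 0.80), (300, 0.90)]
labels = ["ignore", "ignore", "ignore", "ignore",
          "repair", "repair", "repair", "repair"]

ensemble = fit_ensemble(rows, labels)
large_site = predict(ensemble, (250, 0.85))
small_site = predict(ensemble, (30, 0.15))
print(large_site, small_site)
```

The feature tuples play the role of the information Kegelmeyer describes extracting through image analysis; the trees only ever see those numbers, never the raw image.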

Once applied, the optic repair must be checked to ensure it is complete, with no damage remaining, Kegelmeyer said. “Evaluating a single image of the final repaired site is a very subtle problem that can be inconclusive for humans without watching an entire video of the repair.

“It’s also a very hard problem for traditional image analysis and machine learning,” she added. “But because we needed only a pass/fail type of assessment and had many thousands of labeled images from past repairs, this part of the project was very well suited to deep learning.”

Software architects Glenn Larkin (right) and Scott Trummer (center) instruct lead operator Constantine Karkazis (left) on how to use the new automation software that will no longer require his full-time presence in the control room. Credit: Jason Laurea

Kegelmeyer brought in LLNL colleague Nathan Mundhenk from the Computational Engineering Division to leverage what’s called transfer learning, which involves starting with “deep convolutional neural networks developed for millions of labeled images from the Internet,” she explained. “The networks have already figured out how to distinguish between, for example, a cat and a dog, what’s an ear, an eye, what’s fur and skin, and (we) retrained them to distinguish a perfect repair site from one with remnant damage.

“This is what now puts the final stamp of approval on our hands-off process,” she said.

The Optics Mitigation Facility, commissioned in 2009, has four stations, each equipped with a CO2 laser and a large optic translation stage. To locate the small damage sites to be repaired, the facility utilizes NIF’s Final Optics Damage Inspection (FODI) system, a massive modular camera that sits at Target Chamber center and can look back through every beam line, monitor the condition of the final optics, and alert researchers to the presence of damage precursors and their rate of growth. It’s a highly mechanized process, Nostrand noted.

The mitigation process involves mounting the optic on a stage and moving the optic to the coordinates identified by FODI. Once the machine arrives at the damage site, a microscope is inserted and an image is taken of the affected area. Based on this image, rules of engagement are applied to decide how to handle the repair.

“The difference now is we have the experience to be confident our rules of engagement are robust, and trust the computer to apply these rules instead of the human,” Nostrand said. “The computer is now making all those decisions for us and automation is performing the mitigation. Analogous to a self-driving car, we have removed the driver from the driver’s seat.”